Visualising your web browsing history

11 May 2012

Originally posted at https://tech.labs.oliverwyman.com/blog/2012/05/11/web-browsing-history-vis/

I’ve been doing a bit more data visualisation work, with a focus this time on my web browsing. If you’re using Firefox or Chrome (not Opera, as they don’t provide this data), then it turns out that your local history also contains referrer data, i.e. you have a record of not just where you’ve been, but how you got there…
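To make the referrer idea concrete, here is a minimal sketch of pulling "came from" pairs out of a Firefox history database with Python's `sqlite3`. It is not the crawler's actual code. The table and column names (`moz_places`, `moz_historyvisits`, `from_visit`, `place_id`) are my understanding of the `places.sqlite` layout and may differ between browser versions; `referrer_edges` is a hypothetical helper name.

```python
import sqlite3

# Assumed Firefox schema: each row in moz_historyvisits is one visit,
# place_id points at the visited URL in moz_places, and from_visit holds
# the id of the visit that led here (0 when there was no referrer).
FIREFOX_QUERY = """
SELECT src_place.url, dst_place.url
FROM moz_historyvisits AS dst
JOIN moz_historyvisits AS src ON dst.from_visit = src.id
JOIN moz_places AS src_place ON src.place_id = src_place.id
JOIN moz_places AS dst_place ON dst.place_id = dst_place.id
ORDER BY dst.id
"""


def referrer_edges(db_path, query=FIREFOX_QUERY):
    """Return a list of (referrer_url, visited_url) pairs from a history database."""
    conn = sqlite3.connect(db_path)
    try:
        return conn.execute(query).fetchall()
    finally:
        conn.close()
```

A running browser usually keeps its history database locked, so it is safer to point this at a copy of the file. Chrome's history file would need a different query passed in via `query`.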

Why is this interesting? Well, because it shows a slightly different view of the web. There are an awful lot of hyperlinks out there, especially when you get to heavily interconnected places like Wikipedia, but in a way that’s far too much data. Instead, we’ve got some data that shows how you actually interacted with the web, and if we’ve got a reasonable visualisation tool, then we can learn a few things.

Enter the history crawler. Give it one or more history databases from your browsers (by default it only looks at the local data, but it can be handed copies from your other machines as well). It then generates Graphviz graphs that show your own personal path through the web, splitting them into separate graphs wherever there is no history record connecting two subgraphs.
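The splitting step can be sketched roughly as follows: treat each (referrer, page) pair as an edge, group the edges into connected components, and write each component out as its own Graphviz file. This is an illustration under my own assumptions rather than the crawler's real implementation; `split_into_subgraphs` and `to_dot` are hypothetical names.

```python
from collections import defaultdict


def split_into_subgraphs(edges):
    """Group (source, target) edges into weakly connected components."""
    neighbours = defaultdict(set)
    for src, dst in edges:
        neighbours[src].add(dst)
        neighbours[dst].add(src)

    seen = set()
    components = []
    for start in neighbours:
        if start in seen:
            continue
        # Depth-first walk over everything reachable from this page,
        # ignoring edge direction.
        stack, nodes = [start], set()
        while stack:
            node = stack.pop()
            if node in nodes:
                continue
            nodes.add(node)
            stack.extend(neighbours[node] - nodes)
        seen |= nodes
        # Every edge has both ends in the same component, so checking
        # the source is enough.
        components.append([edge for edge in edges if edge[0] in nodes])
    return components


def to_dot(edges, name="history"):
    """Render one component as Graphviz DOT text."""
    def quote(text):
        return '"' + text.replace('"', '\\"') + '"'

    lines = [f"digraph {name} {{"]
    for src, dst in edges:
        lines.append(f"  {quote(src)} -> {quote(dst)};")
    lines.append("}")
    return "\n".join(lines)
```

Each list returned by `split_into_subgraphs` can be passed to `to_dot` and saved as a separate `.dot` file, giving one picture per disconnected piece of browsing.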

(Side note: I ended up having to use dot, fdp and neato. Dot gave the most useful output, but was also the most likely to crash when I handed it something complex. fdp and neato made reasonable backup options when that happened, so we at least get something out.)
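A fallback chain like that might look something like the sketch below: try each layout engine in turn and keep the first one that finishes. The `render` function, the 60-second timeout and the injectable `run` parameter are my own assumptions for illustration; the only Graphviz features relied on are the `-T` (output format) and `-o` (output file) flags that all three commands accept.

```python
import subprocess


def render(dot_path, png_path, engines=("dot", "fdp", "neato"),
           timeout=60, run=subprocess.run):
    """Try each layout engine in order; return the name of the first that works."""
    for engine in engines:
        try:
            # check=True turns a crash or non-zero exit into an exception.
            run([engine, "-Tpng", "-o", png_path, dot_path],
                check=True, timeout=timeout)
            return engine
        except (subprocess.CalledProcessError,
                subprocess.TimeoutExpired,
                FileNotFoundError):
            continue  # fall through to the next engine
    return None  # nothing managed to lay the graph out
```

Passing `run` as a parameter is just a convenience so the fallback logic can be exercised without Graphviz installed.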

So, let’s now have a look at some of the results of this.

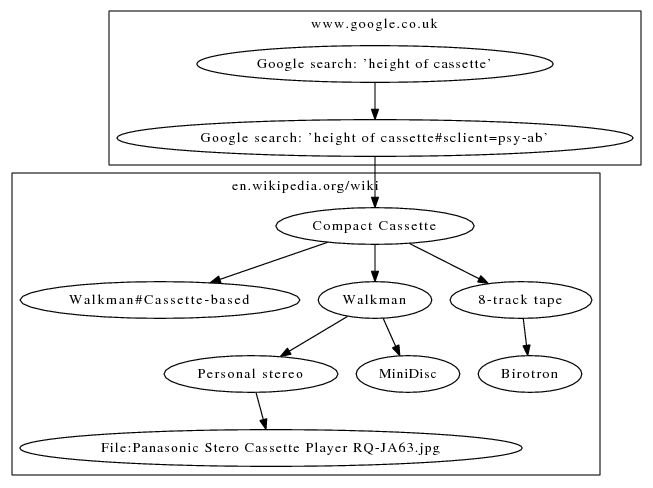

This shows me looking for information on the height of a cassette tape (for reasons that may become clear in a later blog post if a certain item eventually turns up…). It starts with a Google search for “height of cassette”, then I clicked on the result for the Wikipedia page for “Compact Cassette”, and then read a bunch of related Wikipedia pages. A couple of items are of note. Firstly, the boxes marked “www.google.co.uk” and “en.wikipedia.org/wiki” enclose a collection of links with a common prefix, which reduces the amount of extra text needed on screen, and helps to group links. Also, for Wikipedia and various other similar sites, it happens to give nice labels for pages! For the most part, these nice labels just fall out of the URL parsing, but there are a couple of special cases for Google URLs, as Google searches were common items in my history and the search terms get buried a bit in the rest of the URL. Overall, it’s a pretty interesting snapshot of part of my browsing history.
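As a rough idea of how labels like these can fall out of URL parsing, here is a small sketch using Python's `urllib.parse`. It is not the crawler's own code: `label_for` is a hypothetical helper, and the rules it applies (Google's search terms living in the `q` parameter of `/search`, the last path segment as the label, underscores shown as spaces) are my assumptions.

```python
from urllib.parse import parse_qs, unquote, urlsplit


def label_for(url):
    """Return a (group, label) pair: the shared prefix box and the node text."""
    parts = urlsplit(url)
    host = parts.netloc

    # Special case (assumed): pull the search terms out of a Google URL.
    if host.startswith("www.google.") and parts.path == "/search":
        terms = parse_qs(parts.query).get("q", [""])[0]
        return host, f"search: {terms}"

    segments = [segment for segment in parts.path.split("/") if segment]
    if not segments:
        return host, "/"

    # Everything except the last path segment becomes the shared prefix.
    group = "/".join([host] + segments[:-1])
    label = unquote(segments[-1]).replace("_", " ")
    return group, label
```

For example, `label_for("http://en.wikipedia.org/wiki/Compact_Cassette")` gives `("en.wikipedia.org/wiki", "Compact Cassette")`. Pages sharing a group could then be emitted inside one Graphviz subgraph whose name starts with `cluster`, which dot draws as an enclosing box.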

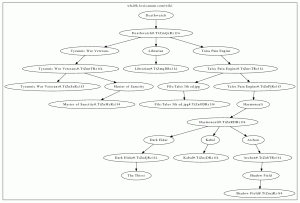

Often the graphs are quite small – there appears to be more disjointness in a browsing session than you’d expect – but some are larger, and some get really messy…

Overall, it was an interesting experiment, and I’ve certainly got a bit more knowledge about how long I spend digging around Wikipedia!